I want to echo John Carmack’s tweet that all giant companies use open-source FFmpeg in their backends. FFmpeg is a core piece of technology that powers our live-streaming and recording system at Inspire Fitness. It is high-quality open-source software that we use to record and stream countless hours of workout videos.

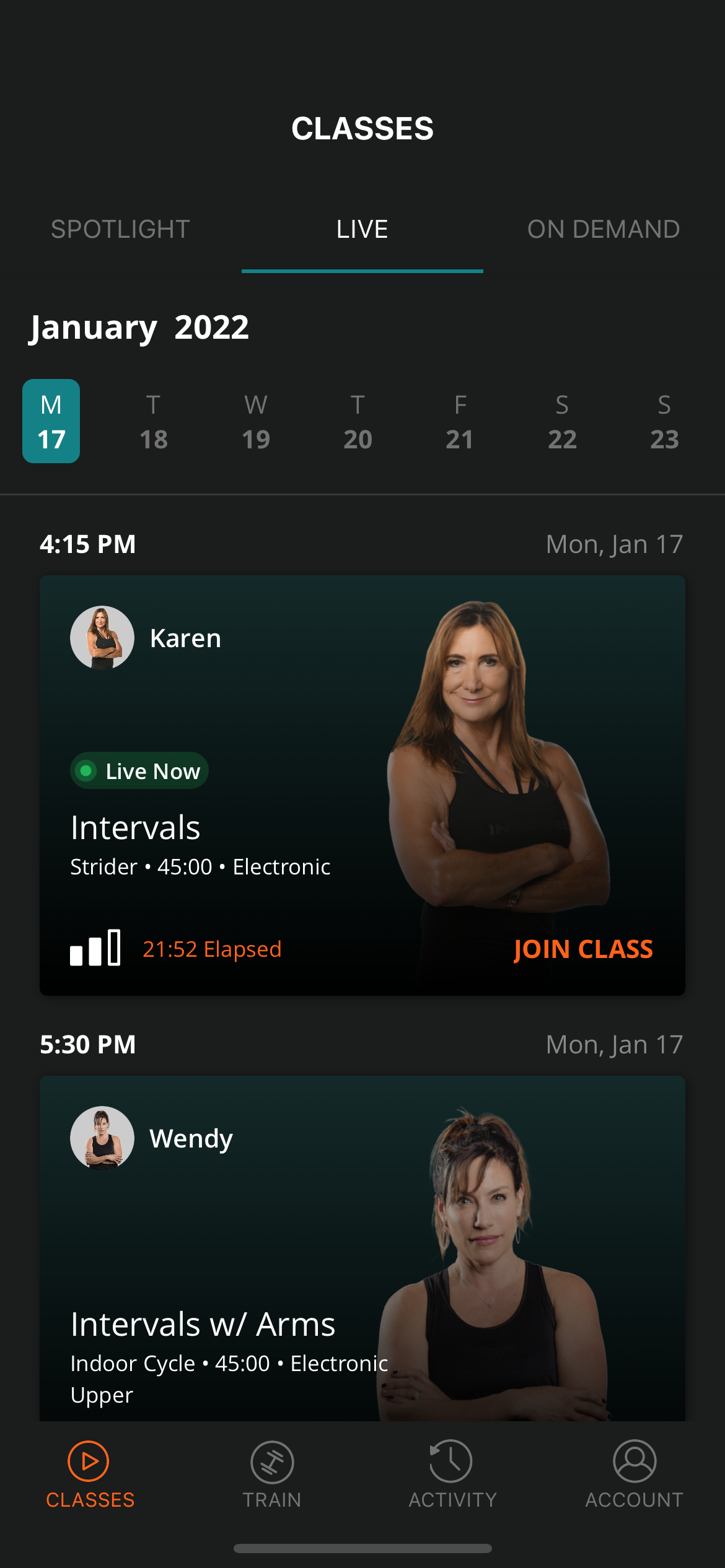

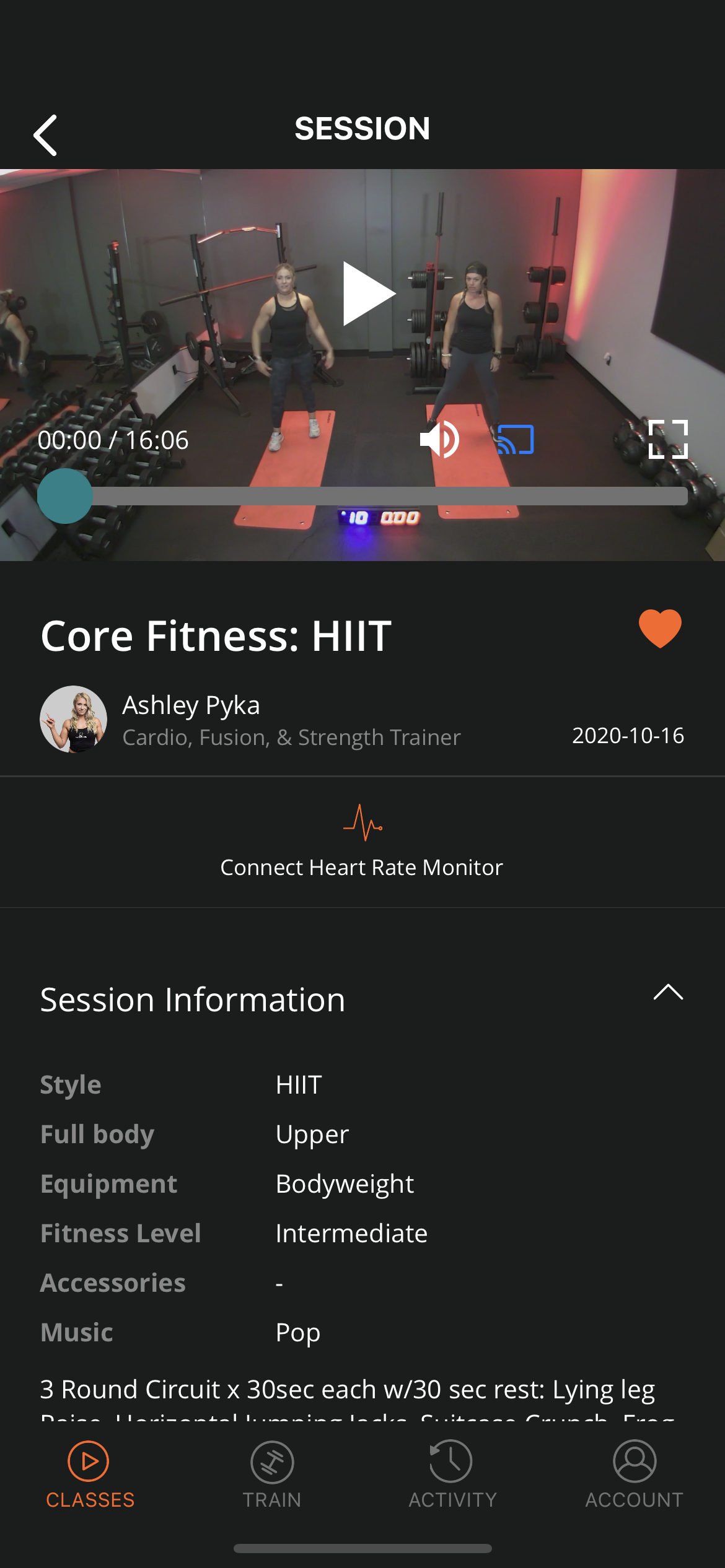

It looks like this:

Users can:

- watch live-streaming content, or

- play back on-demand videos from our content library

High level

Behind the scenes, we have:

- IP cameras wired up in each studio room.

- Support for the RTSP protocol on those cameras.

- A software pipeline, integrated with our custom CMS, that broadcasts (streams) our camera feeds to the internet while simultaneously recording and storing the content in AWS S3 for on-demand playback.

How it all works together:

We configure classes (the recordings) to start at a particular time in our dashboard. The time aligns with our studio schedules, where gym members would often join our classes to work out alongside the instructors.

When the class starts, we launch a dedicated EC2 instance from an AMI with FFmpeg baked in. We call this our encoder/transcoder.
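As a rough illustration of launching one encoder per class, here is a minimal sketch in Python (not the project's Clojure) that builds the arguments for boto3's EC2 `run_instances` call. The function name, the `class-id` tag, the instance type, and the `/opt/encoder/start.sh` path are all hypothetical; only the `run_instances` parameter names come from the AWS API.

```python
def build_launch_request(ami_id, class_id, instance_type="c5.large"):
    """Build keyword arguments for boto3's EC2 client run_instances call.

    Everything here except the AWS parameter names is an assumption
    made for illustration.
    """
    # cloud-init runs user data that starts with a shebang as a script.
    # The script path is hypothetical.
    user_data = (
        "#!/bin/bash\n"
        f"export CLASS_ID={class_id}\n"
        "exec /opt/encoder/start.sh\n"
    )
    return {
        "ImageId": ami_id,              # AMI with FFmpeg baked in
        "InstanceType": instance_type,  # assumed size
        "MinCount": 1,
        "MaxCount": 1,                  # one dedicated encoder per class
        "UserData": user_data,          # boto3 base64-encodes this for us
        # Lets the instance terminate itself when it shuts down.
        "InstanceInitiatedShutdownBehavior": "terminate",
        "TagSpecifications": [
            {
                "ResourceType": "instance",
                "Tags": [{"Key": "class-id", "Value": class_id}],
            }
        ],
    }
```

With boto3 installed and credentials configured, the request could be sent with `boto3.client("ec2").run_instances(**build_launch_request(ami_id, class_id))`.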

As soon as the EC2 instance boots up, it runs the cloud-init script, which starts the Clojure process. mount (a state management library) then starts the dependencies.

This in turn calls `start-stream`.

The `start-stream` logic is actually pretty simple. It pulls the feed from our camera and egresses it to our CDN partner. The output is a playback URL that our video player pulls from.
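A minimal sketch of what such a function might look like, written in Python rather than the project's Clojure. The function names, the URLs in the test data, and the `-rtsp_transport tcp` choice are assumptions; the codec flags follow the ones this post describes.

```python
import subprocess


def build_ffmpeg_command(rtsp_url, rtmp_url, video_codec="copy"):
    """Return the FFmpeg argument list for an RTSP-in, RTMP-out stream."""
    return [
        "ffmpeg",
        "-rtsp_transport", "tcp",  # assumption: pull RTSP over TCP
        "-i", rtsp_url,            # camera feed
        "-c:v", video_codec,       # "copy" passes video through untouched
        "-c:a", "aac",             # transcode audio to AAC
        "-ar", "48000",            # audio sample rate
        "-b:a", "128k",            # audio bitrate
        "-f", "flv",               # RTMP expects an FLV container
        rtmp_url,                  # CDN ingest endpoint
    ]


def start_stream(rtsp_url, rtmp_url):
    """Launch FFmpeg as a child process and return the process handle."""
    return subprocess.Popen(build_ffmpeg_command(rtsp_url, rtmp_url))
```

The sketch leaves out `-re` and `-hls_time`, since how the original command combines them is not shown here.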

Notice we are essentially invoking the FFmpeg that we bundled earlier to:

- take input over the RTSP transport protocol

- set the video/audio codecs through argument flags

| Flag | What it does |
|---|---|
| -re | read input at the native frame rate. Mainly used to simulate a grab device, e.g. if you wanted to stream a video file, you would want this; otherwise it might stream too fast |
| -c:a aac | transcode audio to the AAC codec |
| -ar 48000 | set the audio sample rate (in Hz) |
| -b:a 128k | set the audio bitrate |
| -c:v h264 or copy | transcode video to the h264 codec, or simply send the frames verbatim to the output |
| -hls_time | set the target length of each video segment, in seconds |
| -f flv | deliver the output stream in an FLV wrapper |
| rtmp:// | the destination the transcoded video stream gets pushed to |

The code is essentially a shell wrapper around FFmpeg command-line arguments. FFmpeg is the Swiss Army knife for all video/audio codecs.

The whole encoder.clj is about 300 lines long, including error handling. It handles file uploads (video segment files, FFmpeg logs for debugging), egress to the primary and secondary/fallback RTMP slots, and shutting down the processes and the EC2 instance when we are done with the recording.
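One way the primary and secondary/fallback egress could work is sketched below in Python (not the project's Clojure). It assumes the fallback slot is only tried after the primary run fails; the post does not say whether the real encoder fails over like this or pushes to both slots at once. The function names and the exit-code convention are hypothetical.

```python
import subprocess


def run_ffmpeg(rtsp_url, rtmp_url):
    """Run FFmpeg until it exits and return its exit code (simplified flags)."""
    return subprocess.call(
        ["ffmpeg", "-i", rtsp_url, "-c:v", "copy", "-c:a", "aac", "-f", "flv", rtmp_url]
    )


def stream_with_fallback(targets, run):
    """Try each RTMP target in order.

    `run` takes a target URL and returns an exit code. The first target
    whose run exits with 0 is returned; a non-zero exit moves on to the
    next target. Raises RuntimeError if every target fails.
    """
    for target in targets:
        if run(target) == 0:
            return target
    raise RuntimeError("all RTMP targets failed")
```

A caller would pass something like `lambda target: run_ffmpeg(camera_url, target)` as `run`, where `camera_url` is the RTSP address of the studio camera.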

Lesson learned

This was a rescue project: a rewrite from Go to Clojure. The previous architecture had too many moving pieces, making HTTP requests across multiple microservices. The main server would crash daily due to improper handling of WebSocket messages, causing messages to be lost and encoder instances not to start on time.

The rewrite reduced the complexity: Simple Made Easy, as Rich Hickey puts it.

Rewriting a project is never a good approach considering the opportunity cost. I evaluated a few offerings: mux.com, Cloudflare Stream, and Amazon IVS. On paper, they have all the building blocks we need. In addition, some have features like video analytics and policing/signing of playback URLs, which would be useful for us.

Ultimately, we still manage our own encoder because we were still under a 2-year contract with the CDN company.

In hindsight, if we consider the storage costs and the S3 egress bandwidth fees on top of the CDN costs, I would probably go for a ready-made solution at our company's stage. I would start optimizing once we have more traffic.

The good thing is that this system works really well for live-streaming workloads with programmatic access (barring occasional internet hiccups in our studio).

If you have a video production pipeline that involves heavy video editing, going with prebuilt software could be more flexible until you solidify the core functionalities.

Special thanks to:

- Daniel Fitzpatrick and Vincent Ho, my coworkers, who helped proofread this article and maintain the broadcast system. I enjoy working with you both ❤️. We even have automated tests to prove the camera stream is working end to end!

- Neil, our product manager, who understands tech trade-offs and works with me to balance the product roadmap.

- Daniel Glauser, who hired me for this Clojure gig.